FAKE NEWS, CONSPIRACY THEORIES, MANIPULATION…: A NEUTRAL SOLUTION

[Translated with DeepL, the best translation AI on this planet. No editing applied.]

It has become a sad new normal: every day brings fresh media reports about the latest examples of fake news, disinformation, propaganda, conspiracy theories and the like. And what solutions are proposed? Fact checks, rebuttals, content filters. Hardly anyone wants to go as far as Sacha Baron Cohen, for example, who proposed checking all posts before they are published and, if necessary, holding Zuckerberg personally liable for the content his company disseminates:

"Maybe it's time to tell Mark Zuckerberg and the CEOs of these companies: you already allowed one foreign power to interfere in our elections, you already facilitated one genocide in Myanmar, do it again and you go to jail."

The catch with all the well-intentioned proposals to publish content only after it has been reviewed is scale: with four million Facebook posts shared per minute and 500 million tweets per day (as of spring 2020), such review is absurd. Even if only a small percentage needed review, the problem would remain: how do you find those needles in the haystack? Either manually or automatically, and neither option is convincing. Human reviewers are completely overwhelmed by the sheer volume of content, and while they are still searching and sorting, new garbage is being posted. This "case-by-case principle", in which individual posts are reviewed by individual humans, is unworkable.

Moreover, in many cases there is no sharp line between "true" and "false". What to do with half-truths? Distortions? Exaggerations? And in general, who decides what may and may not be published? What content is covered by the right to "freedom of expression", and what is no longer covered? In which cases is this right invoked unjustifiably, merely as a tactic? Where does censorship begin and where does it end? I don't want to rehearse all the justified objections again. What humans cannot decide unambiguously, an artificial "intelligence" will manage even less. No algorithm, however "smart", will ever reach the accuracy needed to tell right from wrong. The underlying mistake is that people still try to tackle the problem at the level of content, now merely with the help of AI. Both human and machine verification of content are doomed to fail.

IF THE SYSTEM IS THE PROBLEM, ONLY A CHANGE OF SYSTEM CAN BE THE SOLUTION

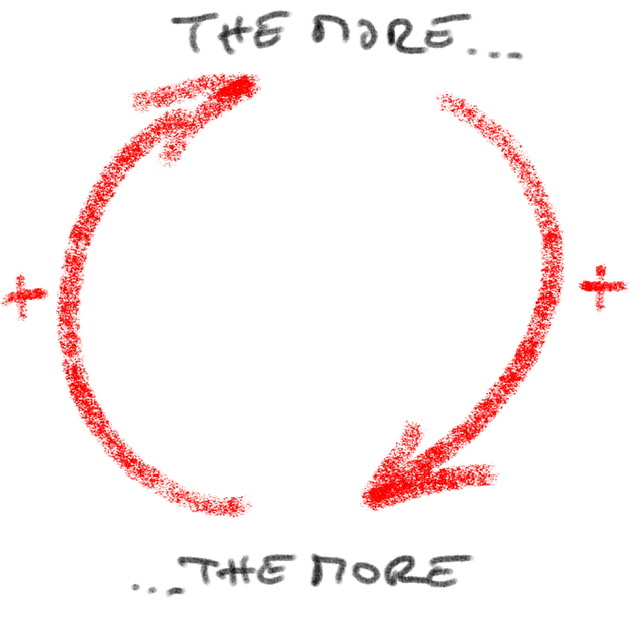

The "success principle" of network media is well known: the more clicks, the more money. More clicks mean greater reach, higher advertising revenue, more profit, more company growth, wider distribution… So the underlying problem is a systemic dynamic: "the more, the more".

We encounter this system dynamic wherever we look nowadays: the higher the ratings, the higher the circulation, the higher the ratings. The more votes, the more political power, the more voters. The more capital, the more assets, the more capital ("to whoever has, more will be given"). The more positive guest reviews on Booking.com, Airbnb, etc., the more bookings; the more bookings, the greater these platforms' market dominance, which brings still more bookings and more reviews; and so on. This principle, familiar to everyone from acoustic feedback (the howl when a microphone picks up its own loudspeaker), is a "self-reinforcing cycle". The fatal thing about such cycles is that they grow like an avalanche until they either hit a limiting factor that slows them down or destroy the system.

The same holds for network media and the content described above. Their "success principle" is likewise "the more, the more", and they, too, push this self-reinforcing feedback to the extreme, until the society it polarizes tears itself apart in a near civil-war struggle between opposing camps. Because the problem is systemic, it needs a systemic solution: a limiting factor that brakes the self-reinforcement in time.

THE SOLUTION: AN AUTOMATIC THROTTLING ALGORITHM

Such an algorithm can be modeled on the principle of the mechanical centrifugal governor:

The more energy is supplied, the faster the balls spin. The faster they spin, the further outward centrifugal force pulls them. The further outward the balls move, the higher they lift the weight on their axis. And the higher the weight rises, the more (and this is where the "limiting factor" comes into play) the energy supply is reduced via linkage rods. The balls sink again, and a balance between energy supply and speed is established. In this way a mill or an engine, for example, can be kept at a constant speed; James Watt famously adapted the device for the steam engines he improved.

The same principle is used in many kinds of emergency brakes and automatic speed limiters, such as:

- Elevator safety gear that stops the car if the rope breaks

- Emergency brakes that automatically bring wind turbines to a stop during storms

- Abseiling and belaying devices in sport climbing that prevent an uncontrolled pull, i.e., an unbraked fall

- Centrifugal brakes in fishing reels, which spare the angler from having to slow the unwinding line by hand

In general terms: the greater the energy input, the greater the spin; but the spin continuously brakes itself through automatic throttling, so that an equilibrium (a constant speed) is reached. Even where the energy supply itself cannot be reduced, such a throttle still lets one regulate its effect at will.
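As a rough illustration of how this kind of negative feedback produces an equilibrium, the toy simulation below models a governed engine in small time steps. It is a sketch under simplifying assumptions, not a physical model of a flyball governor: the function `simulate_governor` and its parameters (`sensitivity`, `friction`, `dt`) are hypothetical, and the valve opening is assumed to shrink linearly with speed.

```python
def simulate_governor(energy_input: float, sensitivity: float = 0.5,
                      friction: float = 0.1, dt: float = 0.01,
                      steps: int = 5000) -> float:
    """Speed of a toy governed engine after `steps` time steps of length `dt`.

    Hypothetical model: valve opening = 1 - sensitivity * speed (clamped at 0).
    """
    speed = 0.0
    for _ in range(steps):
        # Governor: the faster the spin, the further the valve closes.
        valve = max(0.0, 1.0 - sensitivity * speed)
        # Energy let through by the valve accelerates; friction decelerates.
        speed += dt * (energy_input * valve - friction * speed)
    return speed


for energy in (1.0, 10.0, 100.0):
    print(energy, round(simulate_governor(energy), 3))
# prints:
# 1.0 1.667
# 10.0 1.961
# 100.0 1.996
```

In this model a hundredfold increase in energy input moves the equilibrium speed only from about 1.67 to about 2.0, approaching the ceiling `1 / sensitivity`. With `sensitivity=0.0` (no throttle), the equilibrium would instead be `energy_input / friction`, growing in direct proportion to the input.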

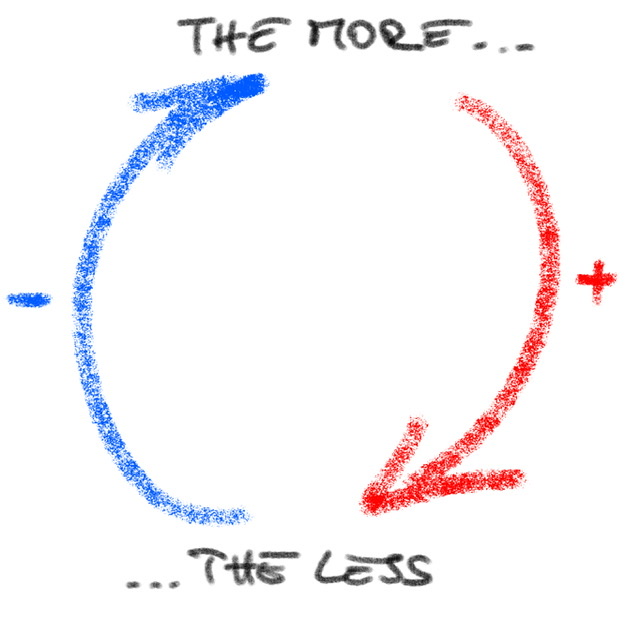

Applied to network media, such a throttling algorithm could work like this: the more people see, "like" or share a post, the greater its "spin". The volume of posts (the "energy supply") can hardly be reduced, but their momentum can: the greater a post's spin, the more such a throttling algorithm could slow it down, possibly to zero. Automatic throttling of this kind would rule out any avalanche-like escalation from the start. In principle, you only need to turn the self-reinforcing algorithm upside down: minus instead of plus; instead of "the more, the more", "the more, the less".
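A toy share cascade can make the difference between "the more, the more" and "the more, the less" concrete. In the sketch below, which is an illustrative assumption rather than any platform's actual ranking, the newest viewers of a post pass it on at an effective rate that either rises with the post's total reach (`feedback=+1.0`) or falls with it (`feedback=-1.0`). The names `spread`, `base_rate`, `feedback` and `scale` are hypothetical.

```python
def spread(rounds: int, base_rate: float, feedback: float,
           seed: float = 10.0, scale: float = 1000.0) -> float:
    """Total reach of one post after `rounds` of resharing (hypothetical toy model).

    feedback > 0: the bigger the reach, the stronger the boost ("the more, the more").
    feedback < 0: the bigger the reach, the stronger the brake ("the more, the less").
    """
    total = seed    # everyone who has seen the post so far
    newest = seed   # viewers reached in the most recent round
    for _ in range(rounds):
        # Effective resharing rate; clamped at 0 so a brake can stop a post
        # but never produce negative reach.
        rate = base_rate * max(0.0, 1.0 + feedback * total / scale)
        newest *= rate
        total += newest
    return total


amplified = spread(rounds=10, base_rate=1.5, feedback=+1.0)
throttled = spread(rounds=10, base_rate=1.5, feedback=-1.0)
print(f"amplified: {amplified:,.0f}  throttled: {throttled:,.0f}")
```

With identical starting conditions, the amplified post reaches tens of thousands of viewers within ten rounds and keeps accelerating, while the throttled one levels off; with these parameters its total reach stays below `scale` however many rounds are run, because the effective rate shrinks toward zero as the reach approaches it.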

The same applies to the notorious "bubbles" in which like-minded people reinforce and escalate each other's convictions while shutting themselves off from anything that might challenge their dogmas. Instead of huge "bubbles" of hundreds of thousands of people, only tiny ones would be possible, too small to sway or endanger society as a whole.

Such an algorithm would have none of the weaknesses of manual review outlined above. It would be fast and efficient; it would not need to make any judgments, and because it would not need to distinguish between "true" and "false", it would be "infallible" a priori. It would be content-neutral. Whether a post may stand would be decided not by its content (judging content inevitably smacks of censorship, or simply is censorship), but solely by the systemic momentum the post triggers. As a result, no post could go "viral" any more, regardless of its content, its author, or the intentions behind it. This would also forestall accusations of censorship and of one-sided influence on public opinion.

This would render futile all malicious attempts at influence by political splinter groups and domestic or foreign trolls: their "leverage" for manipulating society at large would no longer work. It would also become pointless to spread fake news, conspiracy theories, etc. via network media and news apps. As soon as a post gains spin, it is automatically throttled and, if necessary, stopped altogether.

Beyond its immediate positive effect on network media and their users, a throttling algorithm would also have drastic indirect consequences for their operators. Since the entire success model of Facebook and Twitter is built on the principle of "the more, the more", an algorithm that counteracts precisely this feedback would bring their business model crashing down. Unlike splitting up Facebook or Alphabet, it would not merely treat symptoms. The real problem is not the CEOs but the system in which these corporations operate, and must operate if they want to continue to exist. A throttling algorithm would have a drastic effect on this system and would probably bring it to a standstill. For if the feedback loop "the more clicks, the more money" no longer works, their success model is finished.

The network effect (the more people use a medium, the more attractive it becomes, which in turn draws still more users) has, with systemic inevitability, produced the quasi-monopolies of Facebook and Twitter, but also of Amazon, Booking.com, Google… You can no longer get around them. The throttling algorithm I propose would "incidentally" also curb the network effect and thus effectively counteract the formation of monopolies, which until now have been fought only after the horse has bolted, i.e. once they already exist.

Facebook's mission statement, "give people the power to build community and bring the world closer together", would not contradict such an automatic brake; quite the contrary. While network media are currently mainly destroying community and polarizing the world into hostile camps, a braking algorithm could even help them nip divisive campaigns in the bud. Facebook would then once again be the family-and-friends medium it was once meant to be, before it went public.

For now, however, asking whether Zuckerberg, Bezos and Co. would ever shoot themselves in the foot with such a throttling algorithm is a purely rhetorical question. Today's markets, and the financial markets in particular, cannot regulate themselves systemically, because "the more, the more" is precisely their systemic DNA. That is why a political show of strength is needed to dictate to Facebook and Twitter: up to a spin of X, no throttling is needed; from X to Y, the braking effect increases; and from Y on, it is 100%. But if such a command were ever issued, investors would probably be quick to get their money to safety and withdraw their billions, so that further intervention might not even be necessary.
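The rule of no throttling up to a spin of X, increasing braking between X and Y, and full braking from Y on amounts to a piecewise function. The version below assumes the braking rises linearly between X and Y, which the text does not specify, and treats "spin" as a single number such as shares per hour; the function name `throttle_factor` and the thresholds are hypothetical. It returns the fraction of normal distribution a post still receives, so the braking effect is `1 - throttle_factor(...)`.

```python
def throttle_factor(spin: float, x: float, y: float) -> float:
    """Fraction of normal distribution a post keeps (1.0 = unthrottled, 0.0 = stopped).

    Hypothetical rule: no braking up to x, linearly increasing braking
    between x and y, 100% braking from y on.
    """
    if not 0 <= x < y:
        raise ValueError("expected 0 <= x < y")
    if spin <= x:
        return 1.0
    if spin >= y:
        return 0.0
    # Linear ramp from 1.0 at spin == x down to 0.0 at spin == y.
    return 1.0 - (spin - x) / (y - x)


X, Y = 1_000, 10_000  # hypothetical thresholds, e.g. shares per hour
for spin in (500, 1_000, 5_500, 10_000, 50_000):
    print(spin, throttle_factor(spin, X, Y))
# prints:
# 500 1.0
# 1000 1.0
# 5500 0.5
# 10000 0.0
# 50000 0.0
```

A linear ramp is only the simplest choice; any curve that falls monotonically from 1 to 0 between X and Y would match the rule as stated.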

Will politicians have that much backbone? As long as politics remains so intertwined with corporations and their lobbyists, that seems unthinkable to me. It would probably take a different political system first, but that is another topic.

IF YOU LIKE IT, PLEASE SHARE THIS IDEA.